Why are viruses so hard to count?

The plaque assay needs a successor. What will it look like?

Note: The data and findings that I cite in this piece are current as of March 22, 2025. To understand any of these concepts more deeply, I always recommend reading the latest literature or other primary sources.

Table of Contents

When I Nearly Lost my Composure

The Viral Measuring Cup

The Landscape of Today’s Assays

The Recipe for an Effective Viral Assay

Revamping the Viral Measuring Cup

When I Nearly Lost my Composure…

From speaking with friends and mentors in virology, I often hear that the plaque assay is an acquired taste. If so, I think I’ve yet to acquire anything.

It was last summer. After a day of nonstop experiments, I realized long after sunset that I had completely forgotten to run a plaque assay due the next day. It was going to be a long night.

That evening, I cultured up bacteria. I melted agar in the microwave. To save time, I even diluted our phages during the wait.

Nearly three hours later, I was struggling to keep my eyes open as I dispensed the final drops of solution onto my plate. After tidying up and snapping off my gloves, I fumbled for my phone and gasped at the time: 2:14 AM. As I surveyed my surroundings with heavy eyes, I reasoned that I was the only human left in the building.

On the frosty walk back home, I couldn’t help but feel confused. When physicists can detect gravitational waves and chemists work with advanced spectrometers, why were virologists still stuck in the dark ages of cell cultures and agar? Why did the plaque assay still feel like alchemy?

That was my original inspiration for the piece. At first, I was just looking for shortcuts to the plaque assay—tricks and hacks that would make the process simpler. But as I sifted through the history and literature of measuring viruses, I found something more profound and disturbing.

Plaque assays (and most other assays to count viruses) haven’t fundamentally changed since they were developed. And they aren’t just tedious—they’re surprisingly variable, slow, and unstandardized. While the plaque assay arguably kicked off modern virology, we should be frustrated that it’s still our tool of choice. With sharper, more precise assays, we can begin moving towards better virology—but only if we rethink the paradigms of counting viruses.

Of course, there’s some subtext here. To make sense of it all, let’s return to when the plaque assay was developed.

The Viral Measuring Cup

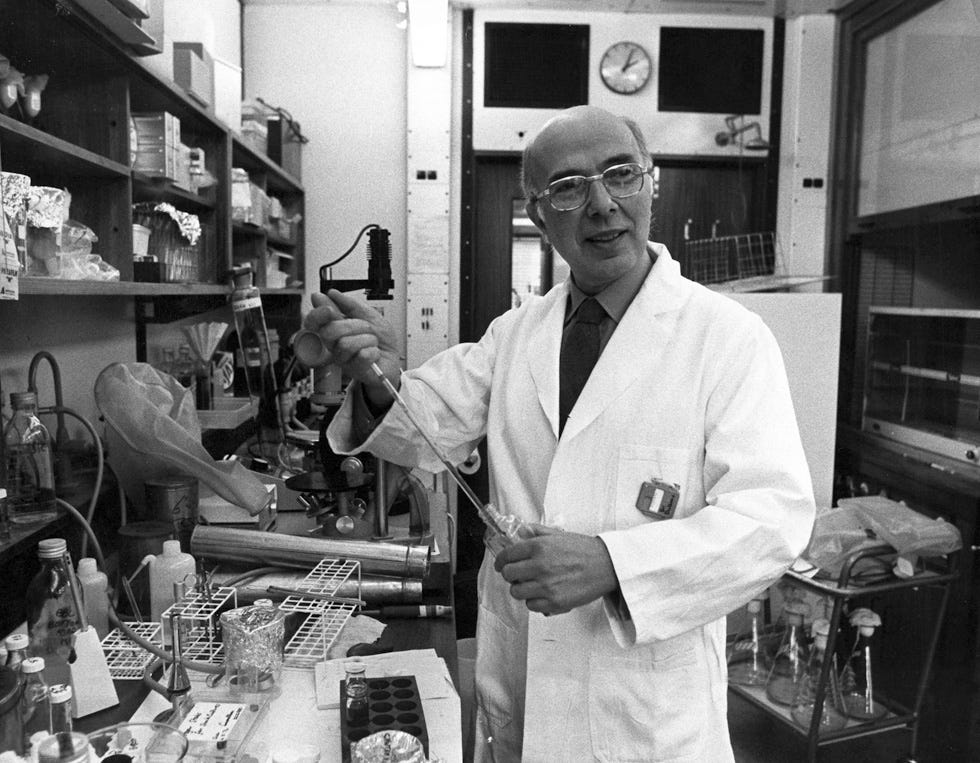

In 1952, the Italian virologist Renato Dulbecco was struggling to count.

Of course, this wasn't normal counting. As he gingerly traced out and annotated his experiments, Dulbecco was mulling over how to count viruses.

In some sense, this was the question du jour in virology. At the time, microbiologists like Félix d’Hérelle had already devised elegant tests to count bacterial viruses.

Meanwhile, the rest of the viral world — and animal viruses in particular — remained temptingly out of reach. Without a reliable mechanism to count viruses, the field still resembled a pseudoscience.

And so, Dulbecco was hard at work. Drawing on his skills in cell culture, he found that when chicken embryo fibroblasts were exposed to western equine encephalomyelitis virus (WEEV), they produced hotspots where host cells were annihilated, which we now know as plaques.

This was the first plaque assay for an animal virus, and it’s now a mainstay in virology.

Back in the 50s, Dulbecco’s contribution to microbiology was revolutionary. At the time, the plaque assay was the only method that could convincingly and quantitatively measure animal viruses. Its existence enabled the development and testing of virtually every antiviral and vaccine that followed.

And yet, I wonder how optimistic Dulbecco would feel seeing his test being used today. The uncomfortable truth is that the plaque assay we use today is practically the same as it was some seven decades ago. The optics might be fancier, but the test is still lengthy, volatile, and panic-inducing.

Could it be that virological measurement stagnated with Dulbecco? Is anyone thinking about a better way to count viruses?

The Landscape of Today's Assays

But perhaps we’re getting ahead of ourselves. To equate viral measurement with the plaque assay isn’t entirely fair.

Before we think about advancing Dulbecco’s work, it helps to reflect on how else we’ve managed to quantify viruses.

Polymerase Chain Reaction (PCR)

Originally developed in the 1980s, PCR machines are now commonplace in microbiology labs worldwide. By repeatedly amplifying fragments of viral DNA (or RNA, in the case of RT-PCR) in a process that resembles natural DNA replication, PCR is remarkably sensitive—detecting as few as 100 viral copies/mL of analyte.

Notably, PCR provides the immediate upshot of accessibility and speed. As I just hinted, the hardware required for PCR is nearly ubiquitous, and training is widely available. As a well-optimized, enzymatic assay, PCR is also relatively fast—and with the introduction of techniques like extreme PCR, it is now possible to quantify viruses in under a minute.

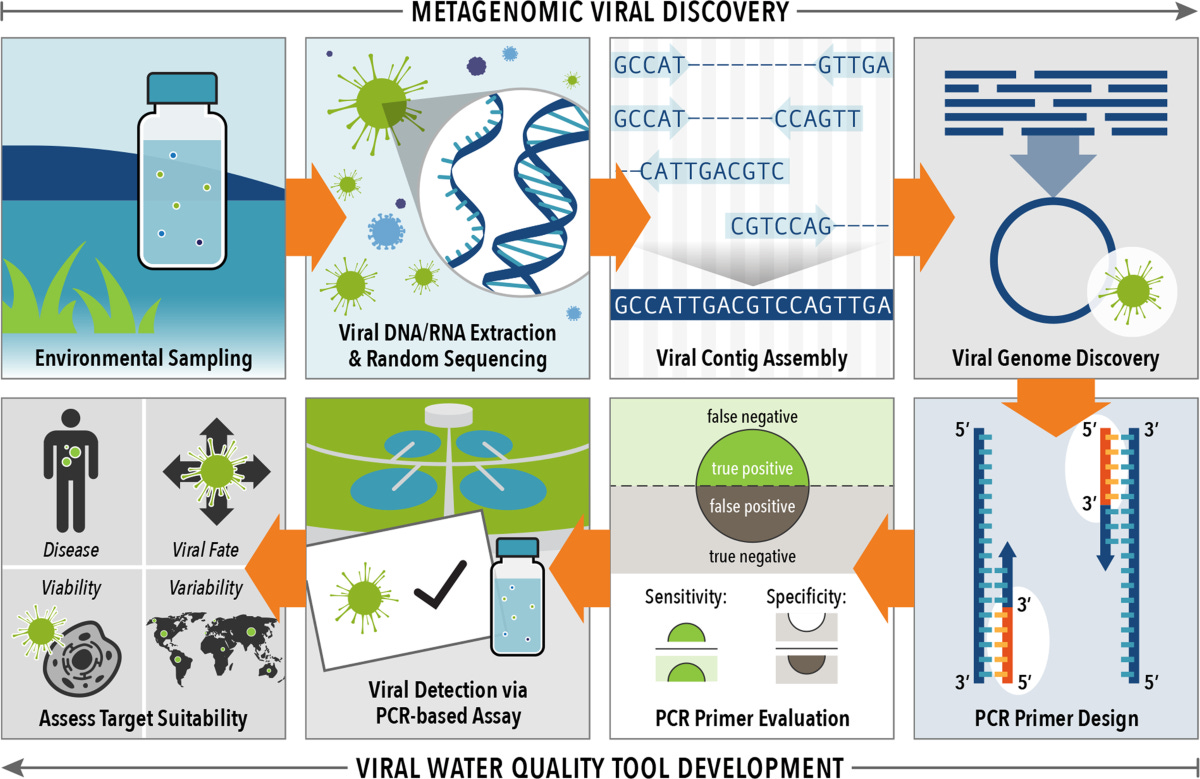

This approach also comes with inherent drawbacks. Perhaps the most glaring concern is that PCR requires pre-designed primers, which are expensive and can only be designed for viruses with known sequences. For now, this means we can’t use PCR to discover novel viruses.

It’s also worth noting that the readout of PCR is fundamentally different from the plaque assay, since it reflects the number of viral genomes and not necessarily the number of infectious particles [1]. And yet, measuring infectious particles is crucial to most clinically relevant applications, from vaccine design to phage therapy.

Focus-Forming Assay (FFA)

In some sense, the focus-forming assay (FFA) is the immunochemical cousin of the plaque assay.

Unlike a standard plaque assay, FFA relies on antibodies specific to the virus of interest, which bind to viral antigens in clusters of infected cells (foci). With the addition of secondary antibodies conjugated to enzymes (e.g., horseradish peroxidase), we can couple viral infection to a colorimetric readout that’s detectable under the microscope [2].

The main benefit here is versatility. Whereas the plaque assay can only form plaques with the lysis of host cells, FFA doesn’t require cell death to produce a readout. Since many viruses aren’t lethal to cells (e.g., filamentous phages), this suggests that FFA might be responsive to a broader spectrum of the viral world.

And yet, I’d argue that FFA still suffers from the viral version of the founder’s dilemma. For the same reason that PCR requires an existing primer for viral DNA/RNA, FFA is only possible with functional antibodies against a virus. While we might find antibodies against popular, well-characterized viruses, we can’t expect the same to hold for emerging strains, and certainly not if we’re trying to discover novel viruses with unknown properties.

With assays like these, we generally need old knowledge to generate new knowledge. This can be deceptively challenging.

Tissue Culture Infectious Dose 50 (TCID50)

Much like the focus-forming assay, TCID50 is a mainstay in animal virology that lets us quantify non-lethal viruses. Rather than counting viruses directly, TCID50 detects damage to host cells through a visual endpoint known as cytopathic effect (CPE).

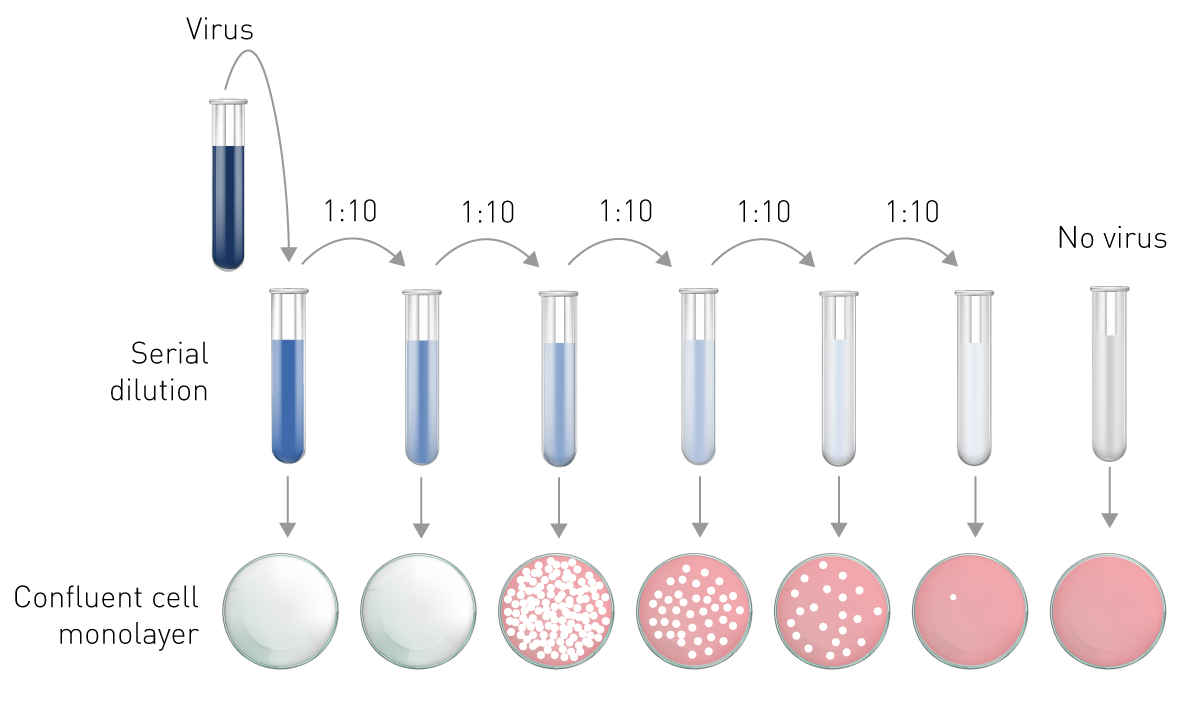

Often, infections are performed on microplates and cellular damage can be automatically assessed under the microscope. By plotting varying concentrations of a virus against the fraction of cultures with CPE, we can then interpolate a TCID50 value — the critical dose at which we’d expect to see cytopathic effect in exactly half of our samples.
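To make that interpolation concrete, here is a minimal sketch using the Spearman-Kärber estimator, one common way to compute a TCID50 (not necessarily the method any particular lab uses). The function name, the well counts, and the tenfold dilution series are all hypothetical, and the formula assumes the series starts at a dilution where every well shows CPE and ends at one where none do.

```python
def spearman_karber_log10_titer(first_dilution_exponent, log10_step,
                                positive_wells, wells_per_dilution):
    """Estimate log10(TCID50 per inoculum volume) with the Spearman-Karber formula.

    first_dilution_exponent: e.g. 1 if the series starts at a 10^-1 dilution.
    log10_step: log10 of the dilution factor (1 for tenfold steps).
    positive_wells: wells showing CPE at each dilution, most concentrated first.
    """
    fractions = [count / wells_per_dilution for count in positive_wells]
    # The formula is only valid when the series brackets the 50% endpoint.
    if fractions[0] != 1.0 or fractions[-1] != 0.0:
        raise ValueError("series must run from all-positive to all-negative wells")
    return first_dilution_exponent - log10_step / 2 + log10_step * sum(fractions)


# Hypothetical plate: tenfold dilutions from 10^-1 to 10^-7, 8 wells each.
cpe_counts = [8, 8, 8, 8, 6, 2, 0]
log10_titer = spearman_karber_log10_titer(1, 1, cpe_counts, 8)
print(f"log10 TCID50 per inoculum volume: {log10_titer:.2f}")
```

For this made-up plate the estimate lands at 10^5.5 TCID50 per inoculum volume, halfway between the 10^-5 and 10^-6 dilutions where the wells flip from mostly positive to mostly negative.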

At first glance, TCID50 seems to combine the best of the plaque assay and FFA. It lets us rapidly quantify the number of infectious particles, even if we’re not sure what the virus is. And since so much of the assay is streamlined with software, it’s affordable and fast enough to perform at scale—especially useful for high-throughput screening or discovery.

Still, TCID50 isn’t immune to the general drawbacks of microbiology. Depending on the host, TCID50 can take days to prepare.

It's also worth noting that “cellular damage” is a subjective term. It’s entirely possible to infect the same population of host cells with two different viruses and get wildly different TCID50 values, even if the absolute number of viruses was identical. Some viruses are just inherently more destructive to cells than others. And since TCID50 doesn’t count viruses directly, this is an artifact we’re still grappling with.

Too Many Chefs (Tests) in the Kitchen

But there’s a broader, more glaring issue people don’t talk about: variability.

As elegant and popular as it is, the plaque assay is the poster child of this phenomenon. Since the formation of even a single extra plaque could create significant deviations, most researchers acknowledge that its readings are only accurate to within about ±0.5 log (~3-fold). Frankly, this is absurd.
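For a sense of scale, a log-scale error converts to a fold error by raising ten to that power. The sketch below does the arithmetic; the function names and the example titer of 10^8 PFU/mL are illustrative assumptions, not values from any study.

```python
def log10_error_to_fold(log10_error):
    """Convert an uncertainty in log10 units into a multiplicative fold factor."""
    return 10 ** log10_error


def titer_window(titer, log10_error):
    """Return the (low, high) titers consistent with a +/- log10 error."""
    fold = log10_error_to_fold(log10_error)
    return titer / fold, titer * fold


fold = log10_error_to_fold(0.5)
low, high = titer_window(1e8, 0.5)  # hypothetical nominal titer in PFU/mL
print(f"+/-0.5 log is about {fold:.2f}-fold")
print(f"a nominal 1e8 PFU/mL could be anywhere from {low:.2e} to {high:.2e}")
```

Note that the top and bottom of that window sit a full tenfold apart, since the error applies in both directions.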

Consider your tolerance for error in practically any other assay. If your PCR machine messed up your read counts by a factor of three, you’d ask for a replacement. If your photon counter messed up your flux by threefold, you’d throw it in the trash. And yet, when it comes to quantifying viruses — one of the few places where accuracy genuinely matters—we accept this error as standard practice.

Of course, the plaque assay is hardly alone in this. Our other virological assays differ wildly not only across repeated measurements, but also from one another.

In 2022, Zapata et al. compared three assays (PCR, plaque assay, and TCID50) to measure identical stocks of SARS-CoV-2 particles. The result was a staggering 0.6-log (~4-fold) variation between tests.

This is more than an issue of instrumentation. Without a standardized, quantitative assay, it’s difficult to call any research scientific. How can you expect to verify your hypothesis if you can’t trust your measurements?

Every day, chemists quantify volatile industrial gases at parts per trillion. Physicists routinely count individual photons in reagent-grade dark rooms. Why does virology settle for back-of-the-envelope titrations?

The Recipe for an Effective Viral Assay

Zooming out and studying the methods we use today is surprisingly helpful. If nothing else, it helps us understand where the status quo is lacking. It also leads us to another question: if there were an ideal method to quantify viruses, what would it look like?

From my own exploits in the lab (and from surveying the systems that exist today), I narrowed it down: the ideal test to count viruses would be quick, reliable, and agnostic.

Let’s dive into this deeper.

Effective Assays are Agnostic

To phrase this cleanly, any solid approach to counting viruses should be universal. We want an assay that’s responsive to any virus, including viruses never seen.

“Wait, why is this important? If we know the specific properties of the virus we want to count, why can’t we use it in a test?”

To illustrate this, I often rely on Nassim Taleb’s classic allegory of the black swan:

Imagine paddling down a lake in search of swans. If you went into this expedition thinking that a swan could only be a white bird with a long neck, you’d row past a row of black swans without a second thought. The problem wasn’t your vision. It’s just that your definition was too narrow.

Targeting specific viruses only works in the narrow case where we know exactly what virus we’re looking for and how to target it. But what if our sample actually contained more than one virus?

Or, what if that sample contained a completely novel virus? If our test selected viruses based on a known receptor, host, or molecular quirk, it would be completely blind to other viruses.

But this is where all the action is. Even our most conservative estimates suggest that >99.9% of viruses haven’t even been documented. A viral assay that’s blind to anything outside its search space isn’t a future-proof solution.

In practice, this means an effective assay can’t use antibodies, molecules, or tags that are specific to anything. Instead, it must detect a property that’s universal (or nearly universal) in all viruses, but not present in everything else.

With some idea about the unfathomable diversity of viruses, I think finding features like these would be difficult. But I certainly don’t think it would be impossible.

As a primitive proof, consider an electron micrograph of a viral sample.

Of course, micrography is already well-optimized and more than doable today. From here, it’s no stretch to imagine training a computer vision model to detect and count viral particles (much like the copilot-style models in microscopes that already enable cell counting today). If we repeated and averaged this process over a wide enough area, the resulting value would be the closest thing we have to a ground-truth viral count [3].

Relying on a hairy, object-detection algorithm almost seems like cheating, but it points to an important truth: despite their diversity, most viruses possess a distinct scale and structure. And these features are distinctive enough for algorithms to tell a virus apart from what isn’t. This already holds one hint to agnostic detection.

And if this is true, what other common, measurable features stretch across the viral world? Could it be morphology? Conserved regions of capsid protein? If we’ve already been able to name plausible features, what else might we be missing?

In some sense, more experimental methods like flow virometry and tunable resistive pulse sensing (TRPS) can already detect viruses based on global features like size and impedance [4]. But in practice, even these systems leave certain boxes unchecked.

Effective Assays are Quick

When we dismantle the plaque assay, it becomes clear the rate-limiting step isn't preparing materials or spotting plates. It's culturing the host.

Depending on whether the host is bacteria (in phage biology) or mammalian cells (as it is in most virology), this step can range from hours to weeks.

Effectively, getting a faster readout means one of two things. We either find some mechanism to speed up host growth, or design a test that doesn't require infection to measure viral particles.

With the current state of biology (and the potentially unpredictable downstream effects of speeding up cell growth), the latter makes more sense to me.

But perhaps this presents a tradeoff. Methods like PCR, for instance, let us quantify viruses without infection, but can't count the number of infectious particles. And yet, this is exactly the kind of numerical information we need to answer important clinical questions.

Flipping this approach gives us the plaque assay or focus-forming assay, which can estimate the number of infectious particles, often at the expense of speed. If we want to count the number of viruses that can replicate, we need to infect a host. And this forces us to revisit the same problem as before: culturing a host isn't smooth sailing.

This was perhaps the single most reproducible pattern that emerged from my research. Essentially, I realized that every viral assay could be categorized as infective or non-infective. There were the plaque assays and endpoint titers, and there were the ELISAs, the PCRs, and the fluorescence hybridization assays.

But this made something else glaringly clear: each of these tests may bear different names and optics, but they were effectively built on the same paradigm. Our tradition of measuring the same viruses and merely swapping the endpoints (e.g., fluorescence vs. color) is inviting stagnation.

The longer our tests continue tap-dancing around existing paradigms, the longer we'll continue tap-dancing around existing results. To me, the path to a faster test begins with transcending current assumptions. What if there was a way to measure infectious particles without culturing a host?

Effective Assays are Reliable

When I use the term reliability, I’m referring to some arbitrary combination of accuracy (how close our measurement is to the true value) and precision (how closely repeated measurements agree with each other).

The bottom line is the same: how trustworthy is this test? If you could bet on how close this test was to the true value over and over, how much would you gamble?

This is where the plaque assay, as a titration assay, consistently underperforms. At the highest dilutions, where a plate may carry only a plaque or two, a single unintended viral particle could double the number of plaques you observe.
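The fragility of sparse plates can be put in rough numbers. The sketch below back-calculates a titer from a single plate and, assuming plaque counts follow Poisson statistics (an idealization that ignores pipetting and incubation variability), estimates the relative counting error as one over the square root of the count. The function names, plated volume, and dilution are hypothetical.

```python
import math


def titer_pfu_per_ml(plaques, dilution, volume_ml):
    """Back-calculate the stock titer from one countable plate."""
    return plaques / (dilution * volume_ml)


def poisson_relative_error(plaques):
    """Relative counting error (standard deviation / mean) for a Poisson count."""
    return 1 / math.sqrt(plaques)


# Hypothetical plate: 0.1 mL of a 10^-6 dilution was plated.
for plaques in (1, 5, 42, 100):
    titer = titer_pfu_per_ml(plaques, 1e-6, 0.1)
    error = poisson_relative_error(plaques)
    print(f"{plaques:>3} plaques -> {titer:.1e} PFU/mL, counting error ~{error:.0%}")
```

Under this assumption, a plate with one plaque carries a 100% counting error before any other source of variability is added, and it takes around a hundred plaques to bring that figure down to 10%.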

Of course, this isn’t all. Every step leading up to and after dilution is variable—from the density of your bacteria to the incubation time, and everything in between. And from my experience conducting simultaneous plaque assays on the same sample, I can attest that the readouts can vary even more.

From an engineering perspective, this offers us two main levers. We could make each step in our assay more reliable, or reduce the number of steps to prevent our error from multiplying.
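To see why the second lever matters, suppose each step contributes its own independent relative error (a coefficient of variation). To first order, independent relative errors in a chain of multiplied steps add in quadrature. The sketch below assumes exactly that; the 10% per-step figure and the step counts are invented for illustration.

```python
import math


def combined_cv(step_cvs):
    """First-order combined relative error for independent multiplicative steps."""
    return math.sqrt(sum(cv ** 2 for cv in step_cvs))


# Hypothetical workflows in which every step has a 10% relative error.
for n_steps in (2, 5, 10):
    total = combined_cv([0.10] * n_steps)
    print(f"{n_steps:>2} steps at 10% each -> ~{total:.0%} overall")
```

Tightening every step helps, but so does simply having fewer of them: under these assumptions, cutting a ten-step protocol to two steps reduces the overall error by more than half.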

Today, automation is becoming an increasingly tempting strategy here. With the increasing roboticization of labwork through startups like Emerald Cloud Lab, it’s already possible to routinize the most error-prone steps in the plaque assay, from serial dilution to incubation.

Somehow, I still think this falls short. Even if precision machines lower our sources of avoidable error, it doesn’t change the nature of the assay. Again, I think the more radical approach is to count viruses without infection. Since culturing, infecting, and incubating host cells still form the bulk of a plaque assay, eliminating these steps would also prevent their error from accumulating. Fewer moving parts means fewer points of failure.

Intuitively, I see the scientific community moving away from the messy world of microbiology and toward physical and chemical tests. For now, techniques like impedance sensing and flow virometry already have the potential to estimate functional viruses without a host. And as the cost of hardware and training continues to fall with time, I think these approaches will only gain momentum.

For what it’s worth, I think it’s impossible to imagine any future without being at least somewhat constrained by the present. Still, based on the knowledge we have today, this is the future I would bet on.

Revamping the Viral Measuring Cup

I went into this story trying to make a better plaque assay. Eventually, I realized that I was asking the wrong question.

Over the centuries, science has been shaped by what we can measure and count.

Astronomy, for example, wouldn’t have been possible without the compound telescope. Modern biology, genetics, and medicine could only emerge when the microscope let us envision the cell. Without the laser interferometer, we couldn’t have proven the existence of gravitational waves. As scientists, we can only rely on what we measure. For us, tests aren’t just instruments — they genuinely define and limit how we see the world.

I’m not attempting to claim that the plaque assay is useless. If anything, I still respect it as one of the most elegant and influential tests in virology. It enabled basic science on HIV, SARS-CoV-2, and phages that singlehandedly saved millions of lives.

All I’m claiming is that the plaque assay, like any assay, needs a successor. As quantification technology advances in physics, chemistry, and virtually every other field of biology, why do virologists still settle for the same tool? How come the tool of our trade hasn’t changed in decades?

Better science starts with better tools, and it’s time for virology to level up. What we need isn’t a marginally better plaque assay.

As biology continues pushing for massive data collection and analysis, we need a fundamentally different assay to measure viruses. In the next decade, I predict we’ll have tests that can reliably measure infectious particles in hours, without requiring host infections. While all sorts of techniques could enable this, the most reasonable path seems to be repurposing viral flow and impedance-based systems, or counting viruses automatically via microscopy.

Whatever the route, this should enable us to identify and count viruses at an unprecedented rate, ushering in an era where we can make real progress toward mapping the viral world.

Times are changing. Why aren’t our tests?

Footnotes

1. Because viral replication is notoriously error-prone, there’s often a massive difference between the number of genomes and the number of functional, infectious particles (a relationship virologists describe with the particle:PFU ratio). For context, even the highest-fidelity viruses (e.g., alphaviruses such as eastern equine encephalitis virus) have a particle:PFU ratio of 1-2, meaning up to 50% of replicated particles can still be defective.

2. While the endpoint of the FFA is visual, its working principle relies on primary and secondary antibodies, much like an ELISA. It’s surprising what you can do with the right antibody, assuming it exists and you can get it to work on the bench.

3. For what it's worth, quantitative electron microscopy is already being explored for viral quantification. While I don't foresee the hardware costs falling significantly anytime soon, advances in software and training might make this approach feasible in labs already equipped with microscopes.

4. It’s helpful to think of TRPS as a cross between a nanopore and an impedance sensor. Viruses flow rapidly through a pore in a membrane while a sensor measures the change in electrical impedance caused by each particle that crosses it.
